TD-OFDFT Tutorials¶

This is the first tutorial in running TD-OFDFT with DFTpy. In this tutorial, you will learn:

How to run an TD-OFDFT calculation starting from the ground-state density

How to use the Dynamics class to write a real-time propagation runner

How to use predictor-correctors

How to add the nonadiabatic functional as a correction

How to run an TD-OFDFT calculation starting from the ground-state density from a more accurate functional

A simple example using adiabatic TFW functional¶

First we need to load the necessary modules

[1]:

import numpy as np

from dftpy.grid import DirectGrid

from dftpy.field import DirectField

from dftpy.functional import Functional, TotalFunctional

from dftpy.optimization import Optimization

from dftpy.constants import LEN_CONV, ENERGY_CONV

from dftpy.formats.vasp import read_POSCAR

from dftpy.td.propagator import Propagator

from dftpy.td.hamiltonian import Hamiltonian

from dftpy.utils.utils import calc_rho, calc_j

from dftpy.td.utils import initial_kick

The next step is to prepare the structure of the system. We load the system structure from a vasp POSCAR file and set the grid size to 36 by 36 by 32. We then initialized the density to be uniform electron gas.

[2]:

DATA='../DATA/'

structure_file = DATA+'Mg8.vasp'

atoms = read_POSCAR(structure_file, names=['Mg'])

PP_list = {'Mg':DATA+'Mg_OEPP_PZ.UPF'}

nr = [36, 36, 32]

grid = DirectGrid(atoms.cell.lattice, nr, atoms.cell.origin)

nelec = 16

rho_ini = np.ones(nr)

rho_ini = DirectField(grid=grid, griddata_3d=rho_ini)

rho_ini = rho_ini / rho_ini.integral() * nelec

Next, we set up the functionals,

[3]:

ke = Functional(type='KEDF',name='TFvW')

xc = Functional(type='XC',name='LDA')

hartree = Functional(type='HARTREE')

pseudo = Functional(type='PSEUDO', grid=grid, ions=atoms, PP_list=PP_list)

totalfunctional = TotalFunctional(KineticEnergyFunctional=ke,

XCFunctional=xc,

HARTREE=hartree,

PSEUDO=pseudo

)

setting key: Mg -> ../DATA/Mg_OEPP_PZ.UPF

and the optimizer

[4]:

optimization_options = {

'econv' : 1e-10 * nelec, # Energy Convergence (a.u./atom)

'maxfun' : 50, # For TN method, it's the max steps for searching direction

'maxiter' : 100, # The max steps for optimization

}

opt = Optimization(EnergyEvaluator=totalfunctional, optimization_options = optimization_options,

optimization_method = 'TN')

We optimize the ground state density.

[5]:

rho0 = opt.optimize_rho(guess_rho=rho_ini)

Step Energy(a.u.) dE dP Nd Nls Time(s)

0 -8.387459237318E-01 -8.387459E-01 2.343046E+00 1 1 4.494858E-02

!WARN: Change to steepest decent

1 -1.475975310796E+01 -1.392101E+01 1.122103E+00 1 4 1.567113E-01

2 -1.578181336422E+01 -1.022060E+00 1.518278E-01 4 2 2.549005E-01

3 -1.592678257192E+01 -1.449692E-01 1.739022E-02 9 1 3.848913E-01

4 -1.593537506750E+01 -8.592496E-03 1.159538E-03 5 1 4.771643E-01

5 -1.593687347295E+01 -1.498405E-03 1.868779E-04 9 2 6.142349E-01

6 -1.593712492567E+01 -2.514527E-04 3.070819E-05 10 2 7.585421E-01

7 -1.593715679007E+01 -3.186440E-05 5.229442E-06 8 2 8.843396E-01

8 -1.593716394287E+01 -7.152798E-06 8.490619E-07 10 2 1.027003E+00

9 -1.593716491926E+01 -9.763947E-07 1.526498E-07 8 2 1.163106E+00

10 -1.593716513241E+01 -2.131436E-07 2.678425E-08 10 2 1.310064E+00

11 -1.593716516249E+01 -3.007881E-08 4.482791E-09 8 2 1.437781E+00

12 -1.593716516886E+01 -6.378199E-09 7.565144E-10 10 2 1.579626E+00

13 -1.593716516973E+01 -8.685905E-10 1.405173E-10 9 2 1.714983E+00

14 -1.593716516989E+01 -1.567066E-10 5.893758E-11 11 2 1.865777E+00

#### Density Optimization Converged ####

Chemical potential (a.u.): -0.1288498705012976

Chemical potential (eV) : -3.5061832308475007

Now come the TD part. The first thing we need to do is to change the KE functional in the total functional to Pauli functional, e.g., remove the von Weizacker part, because it’s handled by the Laplacian part of the Hamiltonian.

[6]:

ke.options.update({'y':0}) # Kinetic energy functionals with the form xTF+yvW+something has the options x and y which controls how much TF or vW in the functional. Setting y=0 removes the vW part from the functional

We then apply a kick on the ground state density, and prepare the Hamiltonian and the propagator.

[7]:

direction = 0 # 0, 1, 2 means x, y, z-direction, respectively

k = 1.0e-1 # kick_strength in a.u.

psi = initial_kick(k, direction, np.sqrt(rho0))

j0 = calc_j(psi)

potential = totalfunctional(rho0, current=j0, calcType=['V']).potential

hamiltonian = Hamiltonian(v=potential)

interval = 1 # time interval in a.u. Note this is a relatively large time step. In real calculations you typically want a smaller time step like 1e-1 or 1e-2.

prop = Propagator(hamiltonian, interval, name='crank-nicholson')

Now comes the actual propagation. The easiest way to run it is to use a for loop.

[8]:

max_steps = 50

for i_t in range(max_steps):

psi, info = prop(psi)

rho = calc_rho(psi)

j = calc_j(psi)

potential = totalfunctional(rho, current=j, calcType=['V']).potential

prop.hamiltonian.v = potential

We can check the observables, for example, the dipole moment, at the end of the propagation.

[9]:

delta_rho = rho - rho0

delta_mu = (delta_rho * delta_rho.grid.r).integral()

print(delta_mu)

[ 3.09496798 -0.22498292 -0.24091637]

Alternatively, we can make a class as a child class of Dynamics. This is a more object oriented way of running propagations and also allows us to attach any observation function to record whatever intermediate result we need at each time step.

[10]:

from dftpy.optimize import Dynamics

class Runner(Dynamics):

def __init__(self, rho0, totalfunctional, k, direction, interval, max_steps):

super(Runner, self).__init__()

self.max_steps = max_steps

self.totalfunctional = totalfunctional

self.rho0 = rho0

self.rho = rho0

self.psi = initial_kick(k, direction, np.sqrt(self.rho0))

self.j = calc_j(self.psi)

potential = self.totalfunctional(self.rho0, current=self.j, calcType=['V']).potential

hamiltonian = Hamiltonian(v=potential)

self.prop = Propagator(hamiltonian, interval, name='crank-nicholson')

self.dipole = []

self.attach(self.calc_dipole) # this attaches the calc_dipole function to the observers list which runs after each time step.

def step(self):

self.psi, info = self.prop(self.psi)

self.rho = calc_rho(self.psi)

self.j = calc_j(self.psi)

potential = self.totalfunctional(self.rho, current=self.j, calcType=['V']).potential

self.prop.hamiltonian.v = potential

def calc_dipole(self):

delta_rho = self.rho - self.rho0

delta_mu = (delta_rho * delta_rho.grid.r).integral()

self.dipole.append(delta_mu)

Now that we made the class, we can create an instance of the class and run it.

[11]:

runner = Runner(rho0, totalfunctional, k, direction, interval, max_steps)

runner()

[11]:

False

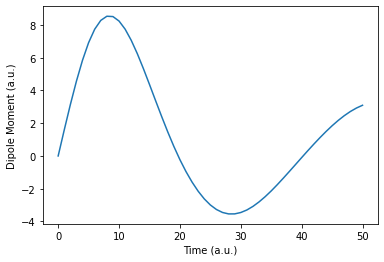

We can plot how the dipole moment changes with time with matplotlib.

[12]:

import matplotlib.pyplot as plt

t = np.linspace(0, interval * max_steps, max_steps + 1)

plt.plot(t, [mu[0] for mu in runner.dipole])

plt.xlabel('Time (a.u.)')

plt.ylabel('Dipole Moment (a.u.)')

plt.show()

Introduction of the predictor-corrector¶

A predictor-corrector can be used to greatly improve the accuracy of the propagation without the need of using a small time step. DFTpy offers a PredictorCorrector class. Here is an example how to use the PredictorCorrector class for real-time propagation.

[13]:

from dftpy.td.predictor_corrector import PredictorCorrector

class Runner2(Dynamics):

def __init__(self, rho0, totalfunctional, k, direction, interval, max_steps):

super(Runner2, self).__init__()

self.max_steps = max_steps

self.totalfunctional = totalfunctional

self.rho0 = rho0

self.rho = rho0

self.psi = initial_kick(k, direction, np.sqrt(self.rho0))

self.j = calc_j(self.psi)

potential = self.totalfunctional(self.rho0, current=self.j, calcType=['V']).potential

hamiltonian = Hamiltonian(v=potential)

self.prop = Propagator(hamiltonian, interval, name='crank-nicholson')

self.dipole = []

self.attach(self.calc_dipole)

self.predictor_corrector = None

def step(self):

self.predictor_corrector = PredictorCorrector(self.psi, propagator=self.prop, max_steps=2, functionals=totalfunctional)

self.predictor_corrector()

self.psi = self.predictor_corrector.psi_pred

self.rho = self.predictor_corrector.rho_pred

self.j = self.predictor_corrector.j_pred

def calc_dipole(self):

delta_rho = self.rho - self.rho0

delta_mu = (delta_rho * delta_rho.grid.r).integral()

self.dipole.append(delta_mu)

[14]:

runner2 = Runner2(rho0, totalfunctional, k, direction, interval, max_steps)

runner2()

[14]:

False

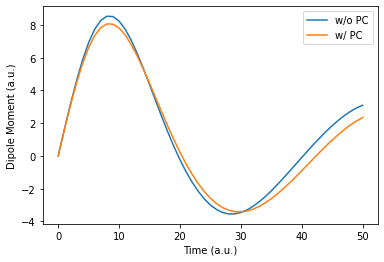

We can compare the dipole moment with and without the predictor-corrector. We can see noticable differences between them.

[15]:

t = np.linspace(0, interval * max_steps, max_steps + 1)

plt.plot(t, [mu[0] for mu in runner.dipole], label='w/o PC')

plt.plot(t, [mu[0] for mu in runner2.dipole], label='w/ PC')

plt.xlabel('Time (a.u.)')

plt.ylabel('Dipole Moment (a.u.)')

plt.legend()

plt.show()

To check whether the predictor-corrector improves the result, we can run a propagation with the time-step 1/10 of the original one,

[16]:

runner1b = Runner(rho0, totalfunctional, k, direction, interval / 10, max_steps)

runner1b()

[16]:

False

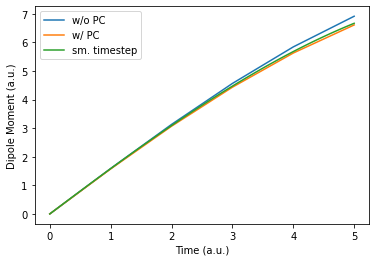

and we find the result is very close to the one with the predictor-corrector!

[17]:

t = np.linspace(0, interval * (max_steps // 10), max_steps // 10 + 1)

t2 = np.linspace(0, interval * (max_steps // 10), max_steps + 1)

plt.plot(t, [mu[0] for mu in runner.dipole][:max_steps // 10 + 1], label='w/o PC')

plt.plot(t, [mu[0] for mu in runner2.dipole][:max_steps // 10 + 1], label='w/ PC')

plt.plot(t2, [mu[0] for mu in runner1b.dipole], label='sm. timestep')

plt.xlabel('Time (a.u.)')

plt.ylabel('Dipole Moment (a.u.)')

plt.legend()

plt.show()

Using nonadiabatic functionals¶

In many scenarios to achieve good result in TD-OFDFT, non-adiabatic Pauli potential is required. To use nonadiabatic functionals, one can simply create an instance of the functional and add it to the total functionals.

[18]:

dyn = Functional(type='DYNAMIC', name='JP1')

totalnonadiabatic = TotalFunctional(KineticEnergyFunctional=ke,

XCFunctional=xc,

HARTREE=hartree,

PSEUDO=pseudo,

Nonadiabatic=dyn

)

runner2b = Runner2(rho0, totalnonadiabatic, k, direction, interval, max_steps)

runner2b()

[18]:

False

However, sometimes the above method can cause numerical instablities. In that case, one can use the nonadiabatic functional as a correction, e.g., for each time step, run a normal propagation without the nonadiabatic functional, then run 1st order Taylor propagator with just the nonadiabatic functional after it. Keep in mind this approximation requires the time step to be small, that’s why we use time step=0.1 here.

[19]:

from dftpy.td.utils import PotentialOperator

class Runner3(Runner2):

def __init__(self, rho0, totalfunctional, correction, k, direction, interval, max_steps):

super(Runner3, self).__init__(rho0, totalfunctional, k, direction, interval, max_steps)

self.correction = correction

correct_potential = self.correction(self.rho0 , current=self.j, calcType=['V']).potential

self.taylor = Propagator(name='taylor', hamiltonian=PotentialOperator(v=correct_potential), interval=interval, order=1)

self.N = self.rho0.integral()

self.interval = interval

def step(self):

correct_potential = self.correction(self.rho , current=self.j, calcType=['V']).potential

self.taylor.hamiltonian.v = correct_potential

super(Runner3, self).step()

self.psi, info = self.taylor(self.psi)

self.rho = calc_rho(self.psi)

self.j = calc_j(self.psi)

[20]:

runner3 = Runner3(rho0, totalfunctional, dyn, k, direction, interval / 10, max_steps)

runner3()

[20]:

False

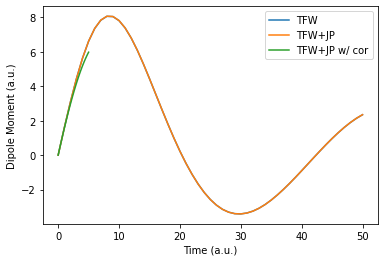

We can notice the effects of the nonadiabatic potential.

[21]:

t = np.linspace(0, interval * max_steps, max_steps + 1)

t2 = np.linspace(0, interval / 10 * max_steps, max_steps + 1)

plt.plot(t, [mu[0] for mu in runner2.dipole], label='TFW')

plt.plot(t, [mu[0] for mu in runner2b.dipole], label='TFW+JP')

plt.plot(t2, [mu[0] for mu in runner3.dipole], label='TFW+JP w/ cor')

#plt.plot(t, mua-mub)

plt.xlabel('Time (a.u.)')

plt.ylabel('Dipole Moment (a.u.)')

plt.legend()

plt.show()

Starting with more accurate ground-state density¶

Another way to improve the result is to use nonlocal KEDFs for the adiabatic part of the Pauli potential. One can do it by directly replace the ke object with nonlocal functionals in the previous examples. However, nonlocal functionals can be quite costy. To reduce the cost, we can use the nonlocal functionals for just the ground state and do the propagation with TFW. Here is an example of how to set it up.

First we run a ground state optimization with LMGP functional.

[22]:

lmgp = Functional(type='KEDF',name='LMGP', kfmin=1e-3, kfmax=10, kdd=2)

totalfunctionallmgp = TotalFunctional(KineticEnergyFunctional=lmgp,

XCFunctional=xc,

HARTREE=hartree,

PSEUDO=pseudo

)

optimization_options = {

'econv' : 1e-5 * nelec, # Energy Convergence (a.u./atom)

'maxfun' : 50, # For TN method, it's the max steps for searching direction

'maxiter' : 100, # The max steps for optimization

}

opt = Optimization(EnergyEvaluator=totalfunctionallmgp, optimization_options = optimization_options,

optimization_method = 'CG-HS')

rho0_lmgp = opt.optimize_rho(guess_rho=rho_ini)

Step Energy(a.u.) dE dP Nd Nls Time(s)

0 -8.387459237318E-01 -8.387459E-01 2.343046E+00 1 1 2.884568E+00

1 -1.515142866267E+01 -1.431268E+01 1.442264E+00 1 4 6.044688E+00

2 -1.633464139833E+01 -1.183213E+00 8.900099E-01 1 1 6.867623E+00

3 -1.651334790252E+01 -1.787065E-01 2.773575E-01 1 2 8.429926E+00

4 -1.660880578423E+01 -9.545788E-02 2.420474E-01 1 2 1.001886E+01

5 -1.667538730116E+01 -6.658152E-02 1.263650E-01 1 1 1.084262E+01

6 -1.670938973479E+01 -3.400243E-02 6.448108E-02 1 2 1.246324E+01

7 -1.672155137927E+01 -1.216164E-02 2.947864E-02 1 2 1.407281E+01

8 -1.673204801376E+01 -1.049663E-02 4.269679E-02 1 3 1.649048E+01

9 -1.674553627957E+01 -1.348827E-02 3.452370E-02 1 3 1.894890E+01

10 -1.675450537821E+01 -8.969099E-03 3.140962E-02 1 1 1.978981E+01

11 -1.675805544749E+01 -3.550069E-03 7.895581E-03 1 2 2.139308E+01

12 -1.675964076848E+01 -1.585321E-03 2.946663E-03 1 2 2.298218E+01

13 -1.676044496700E+01 -8.041985E-04 2.973395E-03 1 1 2.379298E+01

14 -1.676145278756E+01 -1.007821E-03 2.808021E-03 1 2 2.532127E+01

15 -1.676241551919E+01 -9.627316E-04 2.098251E-03 1 1 2.611279E+01

16 -1.676311400010E+01 -6.984809E-04 1.240467E-03 1 2 2.767714E+01

17 -1.676366700940E+01 -5.530093E-04 1.395018E-03 1 1 2.847266E+01

18 -1.676407994490E+01 -4.129355E-04 7.074610E-04 1 2 3.004532E+01

19 -1.676425795730E+01 -1.780124E-04 4.623685E-04 1 2 3.157964E+01

20 -1.676430106608E+01 -4.310878E-05 5.137061E-04 1 1 3.236226E+01

21 -1.676430341157E+01 -2.345489E-06 2.439966E-04 1 3 3.470306E+01

#### Density Optimization Converged ####

Chemical potential (a.u.): -0.14452301324630942

Chemical potential (eV) : -3.9326711276024007

To have the propagation run correctly, we need to introduce a potential equals the LMGP potential evaluated at the LMGP ground-state density minus the TFW potential evaluated at the same density.

[23]:

from dftpy.functional.external_potential import ExternalPotential

lmgp.options.update({'y':0})

vtf0 = ke(rho=rho0_lmgp, calcType = {'V'}).potential

vlmgp0 = lmgp(rho=rho0_lmgp, calcType = {'V'}).potential

ext = ExternalPotential(v=vlmgp0-vtf0)

totalfunctional.UpdateFunctional(newFuncDict={'ext':ext})

[24]:

runner2c = Runner2(rho0_lmgp, totalfunctional, k, direction, interval, max_steps)

runner2c()

[24]:

False

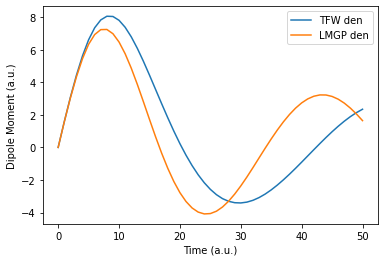

We can see using LMGP ground-state density as the initial condition makes a difference.

[25]:

t = np.linspace(0, interval * max_steps, max_steps + 1)

plt.plot(t, [mu[0] for mu in runner2.dipole], label='TFW den')

plt.plot(t, [mu[0] for mu in runner2c.dipole], label='LMGP den')

plt.xlabel('Time (a.u.)')

plt.ylabel('Dipole Moment (a.u.)')

plt.legend()

plt.show()